The AI that Google and Facebook use to tackle suicide-related content is not working properly in the UK and Egypt. The two internet giants may surface content that encourages suicide when users search in Arabic, use female pronouns, or use the word “die” instead of “suicide” in English.

The world’s top search engine and social network have amended their search algorithms to surface helplines and prioritise suicide prevention material when a user searches for suicide-related content. However, a recent investigation found that, in some cases, the AI does not provide this service in Egypt and the UK.

The investigation, titled “The Death Algorithm”, was broadcast by Alghad TV, a news and current affairs channel broadcasting from London and Cairo. It is based on tests of Google's and Facebook's search algorithms in Arabic and English from Egypt and Britain.

The investigation was produced and presented by Ahmed Elsheikh, Digital Media Expert and Member of the New Media Council at the National Union of Journalists in the United Kingdom and Ireland.

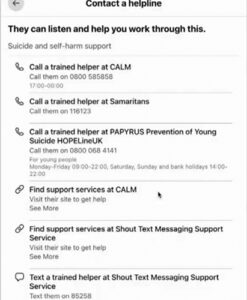

The documentary found that when a search is made in English from the UK, both websites surface details of the suicide prevention helpline, followed by articles or posts on tackling suicide.

When the search was made in Arabic from the UK, the top result was the helpline, followed by posts or articles that explain suicide methods in detail and may encourage suicide.

The results were worse when the search was made from Egypt. Neither website showed the helpline when the search was in Arabic; they surfaced it only when the search was in English.

Elsheikh has been following this issue for three years and has noticed a minor improvement in Google's algorithm: it has started to surface the helpline details when a search is made in Arabic from the UK. This service was not available when he wrote his first article on the topic in December 2019.

“The results prove that the internet giants, Google and Facebook, should improve their AI in order to provide the same service and quality in all countries and all languages especially in the Middle East where suicide is a serious issue. There has been a recent case in Egypt where a suicide case was live streamed on Facebook”, Elsheikh said.

The documentary interviewed Professor Mohamed Abdel-Muguid, Pro Vice-Chancellor for Science, Technology, Engineering and Medicine (STEM) at Canterbury Christ Church University, who tested Google's search algorithm in Arabic and English from his lab at the university. Prof Abdel-Muguid found that Google's algorithm failed to detect suicidal thoughts in two cases: when he used a female pronoun in Arabic, and when he searched for “I want to die” instead of “I want to suicide” in English. “The experiment proved that Google AI may have flaws in Arabic and English”, Abdel-Muguid said.

“The Death Algorithm” explained the case of Molly Russell, the British teenager whose family believes she took her own life because she viewed self-harm content on Instagram. Meta, the company that owns Instagram, is to provide copies of the material that Russell viewed prior to her death, and an inquest will be held to decide whether Instagram's algorithm encouraged her to take her own life.

The documentary quoted a report by the Royal College of Psychiatrists calling for social media platforms to be forced to hand over their data in order to tackle self-harm content. The documentary interviewed two psychiatrists, Dr Gamal Ferwiz from Egypt and Dr Mahmoud Lawaty from the UK, and both agreed that the nature of the material users watch online can push them towards or away from suicide.

The investigation discussed the regulation of social media and interviewed Dr Aysem Vanberg, Lecturer in Law at the Department of Law at Goldsmiths, University of London, who contributed to a written statement to the House of Commons on the Online Safety Bill. Vanberg stressed the need to regulate social media in order to tackle self-harm content. However, she criticised the Online Safety Bill because it is based on vague definitions and does not tackle the issue properly.
