If you’ve ever listened to early computer-generated voices, you probably remember how stiff and robotic they sounded. Flat tone. Awkward pauses. Zero emotion. It worked for basic functionality, but it never felt human.

Fast forward to today, and the difference is striking.

Modern AI-generated voices can tell stories, explain complex ideas, and even convey emotion with surprising realism. They pause naturally, emphasize the right words, and adapt their tone depending on the context. What once felt mechanical now feels conversational—and in many cases, almost indistinguishable from a real human speaker.

So what changed? The answer lies in how artificial intelligence now understands language, emotion, and human speech patterns at a much deeper level.

Why “Human-Sounding” Voice Matters More Than Ever

Voice technology is no longer limited to accessibility tools or navigation systems. It’s everywhere:

- E-learning platforms

- Audiobooks and podcasts

- Customer support and IVR systems

- Marketing videos and product demos

- Smart assistants and mobile apps

As voice becomes a primary interface, users expect it to feel natural. When a voice sounds robotic, it creates friction, reduces trust, and shortens engagement time. On the other hand, a natural-sounding voice builds comfort, credibility, and emotional connection.

User experience research suggests that people tend to trust and retain information more readily from voices that sound warm, expressive, and human-like. This has pushed companies to invest heavily in AI-driven voice realism.

The Evolution From Rule-Based Speech to AI Intelligence

Early voice systems relied on predefined rules. Engineers manually programmed pronunciation, pitch, and pacing. While functional, these systems lacked flexibility and nuance.

AI changed that by introducing learning instead of rigid instruction.

From Scripts to Learning Models

Modern voice systems are trained on massive datasets containing thousands of hours of human speech. These datasets allow AI models to learn:

- How humans naturally pause in conversation

- Where emphasis typically falls in a sentence

- How tone changes with emotion or intent

- How speech varies across accents and languages

Instead of following rules, AI predicts how speech should sound based on context.

Natural Language Processing: Teaching Machines Meaning

One of the biggest breakthroughs came from advances in Natural Language Processing (NLP).

NLP allows AI to understand not just words, but meaning. This includes:

- Sentence structure

- Context and intent

- Emotional cues

- Relationships between words

For example, the sentence “That’s great.” can sound genuinely enthusiastic or deeply sarcastic depending on context. Older rule-based systems read it the same way every time. AI-powered models can use the surrounding text to infer which reading fits.

This understanding is essential for producing speech that feels intentional rather than robotic.

Prosody: The Secret to Human-Sounding Speech

Prosody refers to the rhythm, stress, and intonation of speech. It’s what makes spoken language feel alive.

AI models now analyze prosody patterns by studying how humans speak in different situations:

- Storytelling

- Instruction

- Casual conversation

- Formal announcements

By mimicking these patterns, AI can generate voices that:

- Speed up or slow down naturally

- Raise or lower pitch for emphasis

- Insert realistic pauses

- Adjust tone based on emotional context

This is a major reason modern voice outputs feel dramatically more human than earlier versions.
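One common way to spell out these adjustments by hand is SSML (Speech Synthesis Markup Language), a W3C standard that many speech engines accept in some form. The sketch below builds a small SSML string with Python's standard library. The helper names `prosody`, `pause`, and `speak` are made up for illustration, the bare `<speak>` root is a simplification, and which attribute values a given engine honors is an assumption to check against its documentation.

```python
from xml.sax.saxutils import escape, quoteattr


def prosody(text: str, rate: str = "medium", pitch: str = "medium") -> str:
    """Wrap text in an SSML <prosody> element that sets speed and pitch."""
    return f"<prosody rate={quoteattr(rate)} pitch={quoteattr(pitch)}>{escape(text)}</prosody>"


def pause(milliseconds: int) -> str:
    """Insert a pause of the given length using an SSML <break> element."""
    return f'<break time="{milliseconds}ms"/>'


def speak(*parts: str) -> str:
    """Join already-built SSML fragments inside a simplified <speak> root."""
    return "<speak>" + "".join(parts) + "</speak>"


# A slow, low opening, a short pause, then a faster, higher follow-up.
ssml = speak(
    prosody("Once upon a time,", rate="slow", pitch="low"),
    pause(400),
    prosody("everything changed.", rate="fast", pitch="high"),
)
print(ssml)
```

Modern neural voices usually infer this kind of pacing on their own. Markup like this is mainly useful when you want to override the default reading for a specific line.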

Deep Learning and Neural Voices

Neural networks—particularly deep learning models—are at the heart of today’s most realistic voices.

How Neural Voices Work

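Neural voice systems don’t stitch together pre-recorded sounds the way older concatenative systems did. Instead, they generate the speech waveform directly from the text, typically by predicting acoustic features and then converting them to audio, which produces smooth transitions between sounds.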

Benefits include:

- More natural pronunciation

- Consistent voice quality

- Greater emotional range

- Reduced distortion and artifacts

As part of this shift, text-to-speech systems powered by neural networks have moved beyond accessibility tools into a much broader range of communication uses.
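To get a feel for why seams between stitched segments can be audible, consider a toy comparison. This is not a neural model: it synthesizes a plain sine tone with Python's standard library, once by joining two independently generated segments and once by carrying the phase across the pitch change. The sample rate, frequencies, and function names are all illustrative assumptions.

```python
import math

SAMPLE_RATE = 16_000  # samples per second (illustrative value)


def tone(freq_hz: float, n_samples: int, start_phase: float = 0.0) -> tuple[list[float], float]:
    """Generate a sine tone; return the samples and the phase reached at the end."""
    step = 2 * math.pi * freq_hz / SAMPLE_RATE
    samples = [math.sin(start_phase + i * step) for i in range(n_samples)]
    return samples, start_phase + n_samples * step


def boundary_jump(left: list[float], right: list[float]) -> float:
    """Size of the sample-to-sample jump at the seam between two segments."""
    return abs(right[0] - left[-1])


n = 1_000
first, end_phase = tone(220.0, n)

# Stitched: the second segment restarts at phase 0, ignoring where the first ended.
stitched_second, _ = tone(330.0, n)

# Continuous: the second segment picks up the phase where the first one stopped.
continuous_second, _ = tone(330.0, n, start_phase=end_phase)

print("stitched seam jump:  ", round(boundary_jump(first, stitched_second), 3))
print("continuous seam jump:", round(boundary_jump(first, continuous_second), 3))
```

The stitched version has a large jump at the seam, which a listener would hear as a click. The continuous version changes pitch without one. Real concatenative systems smooth their joins, so treat this only as an intuition for the problem that generating the signal as a whole sidesteps.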

Emotional Intelligence in AI Voices

One of the most impressive developments is emotional modeling.

AI can now:

- Detect emotional tone in written text

- Match voice output to emotional context

- Simulate empathy, excitement, or calmness

This is particularly valuable in areas like:

- Mental health applications

- Customer service interactions

- Educational content

- Story-driven media

A calm voice can reduce frustration. An enthusiastic tone can boost engagement. Emotional alignment makes interactions feel more human and less transactional.
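As a rough illustration of matching voice output to emotional context, the sketch below uses a tiny hand-written keyword list to guess a tone and then looks up voice settings for it. Real systems learn this from data rather than keyword lists. The lexicon, the tone labels, and the `VoiceStyle` fields are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VoiceStyle:
    """Hypothetical voice settings; real engines expose different controls."""
    rate: str
    pitch: str


# Toy keyword lexicon (an assumption for illustration, not a trained model).
TONE_KEYWORDS = {
    "frustrated": {"broken", "again", "waiting", "refund"},
    "excited": {"congratulations", "won", "launch", "amazing"},
}

STYLES = {
    "frustrated": VoiceStyle(rate="slow", pitch="low"),   # calm, steady reply
    "excited": VoiceStyle(rate="fast", pitch="high"),     # energetic reply
    "neutral": VoiceStyle(rate="medium", pitch="medium"),
}


def detect_tone(text: str) -> str:
    """Return the tone with the most matching keywords, or 'neutral' if none match."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    scores = {tone: len(words & keywords) for tone, keywords in TONE_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "neutral"


def style_for(text: str) -> VoiceStyle:
    """Pick voice settings that fit the detected tone of the incoming text."""
    return STYLES[detect_tone(text)]


print(style_for("My order is broken again and I am still waiting."))
print(style_for("Congratulations, you won!"))
print(style_for("Your appointment is at noon."))
```

The design point is the separation: one step estimates the tone, another maps that tone to delivery. Either step can be replaced with something smarter without touching the other.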

Multilingual and Accent Adaptation

Human speech isn’t universal—it’s deeply influenced by culture, language, and accent.

AI now supports:

- Dozens of languages

- Regional accents and dialects

- Code-switching between languages

- Localized pronunciation

This makes voice technology more inclusive and globally scalable, allowing businesses and creators to reach diverse audiences without losing authenticity.
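Where an engine accepts SSML, a language switch inside a single utterance can be marked explicitly. The sketch below assumes the `<lang>` element and `xml:lang` attribute from SSML 1.1. Support varies between engines, and the helper name `in_language` is made up for illustration.

```python
from xml.sax.saxutils import escape, quoteattr


def in_language(text: str, lang_code: str) -> str:
    """Mark a span of text as belonging to another language via SSML <lang>."""
    return f"<lang xml:lang={quoteattr(lang_code)}>{escape(text)}</lang>"


# An English sentence that switches to Spanish for a short phrase.
ssml = (
    '<speak xml:lang="en-US">'
    + escape("The waiter smiled and said, ")
    + in_language("buen provecho", "es-ES")
    + escape(".")
    + "</speak>"
)
print(ssml)
```

Tagging the phrase tells the engine to pronounce it with Spanish rules instead of reading it as if it were English.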

Actionable Ways Businesses Can Use Human-Like Voice AI

If you’re considering integrating AI voice technology, here are practical strategies to maximize impact:

1. Match Voice to Brand Personality

Choose a voice style that reflects your brand—professional, friendly, energetic, or calm.

2. Use Context-Aware Scripts

Write conversational scripts, not formal text. AI voices tend to sound most natural when the script is written the way people actually speak.

3. Test Emotional Variations

Experiment with tone settings for different use cases like onboarding, support, or marketing.

4. Prioritize Accessibility

Use voice features to support users with visual impairments, learning differences, or language barriers.

5. Continuously Optimize

Gather user feedback and adjust voice settings over time to improve engagement.

Ethical Considerations and Transparency

As voices become more human-like, ethical concerns emerge.

Key considerations include:

- Clear disclosure when voices are AI-generated

- Avoiding deceptive impersonation

- Protecting voice data privacy

- Preventing misuse to spread misinformation

Responsible development ensures trust remains intact as technology advances.

What the Future Holds

AI-generated voices will continue to evolve rapidly. We can expect:

- Hyper-personalized voice experiences

- Real-time emotional adaptation

- Seamless integration with AR and VR

- Voice agents capable of long-form conversation

As these systems improve, the gap between human and machine speech will continue to narrow—making voice one of the most natural ways we interact with technology.

Final Thoughts

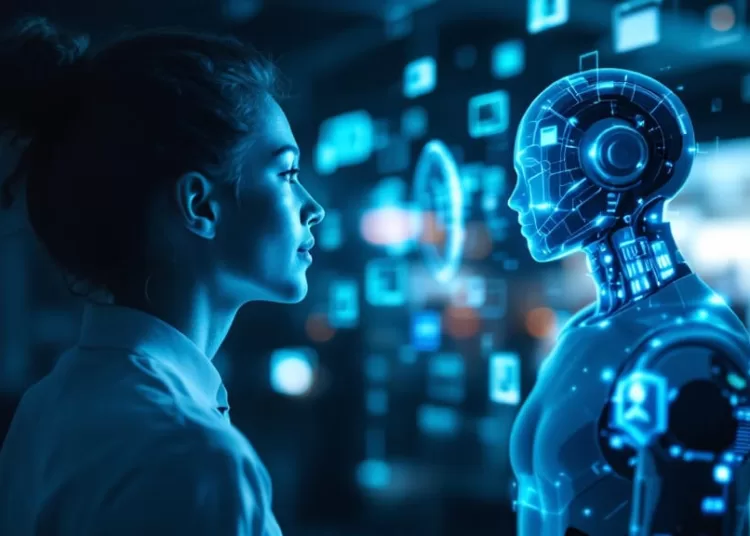

The journey from robotic monotone to expressive, human-like voice is one of AI’s most impressive achievements. By learning how people speak, feel, and communicate, AI has transformed voice technology into a powerful bridge between humans and machines.

As businesses, creators, and platforms adopt these tools, the focus should remain on empathy, clarity, and authenticity. Because the most effective technology doesn’t just speak—it connects.